Stimulating the Human Visual System Beyond Real World Performance in Future Augmented Reality Displays. David Dunn, Okan Tursun, Hyeonseung Yu, Piotr Didyk, Karol Myszkowski, Henry Fuchs. IEEE International Symposium on Mixed and Augmented Reality (ISMAR). Recife, Brazil. 2020.


Abstract: New augmented-reality near-eye displays provide capabilities for enriching real-world visual experiences with digital content. Most current research focuses on improving both hardware and software to provide digital content that seamlessly blends with the real world. This is believed not only to contribute to the visual experience but also to increase human task performance. In this work, we take a step further and ask whether the capabilities of current and future display designs, combined with efficient perception-inspired content optimizations, can be used to improve human task performance beyond human capabilities in the natural world. Based on an in-depth analysis of previous literature, we hypothesize that such enhancements can be achieved when the human visual system is provided with content that optimizes the oculomotor responses. To investigate possible gains, we present a series of perceptual experiments that build upon this idea. More specifically, we focus on speeding up the accommodation response, which contributes significantly to eye adaptation when a new stimulus is shown. Through our experiments, we demonstrate that such speedups can be achieved and, more importantly, that they can lead to significant improvements in human task performance. While not all of our results give definite answers, we believe that they reveal plentiful opportunities for further enhancing the human experience and task performance when using new augmented-reality displays.

[Paper] [DOI] [BibTeX] [Slides] [Video]


Required Accuracy of Gaze Tracking for Varifocal Displays. David Dunn. VisAug: Workshop on Eye Tracking and Vision Augmentation, IEEE VR Conference. Osaka, Japan. 2019.


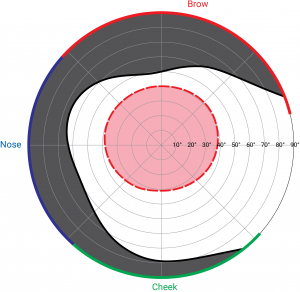

Abstract: Varifocal displays are a practical method to solve the vergence–accommodation conflict in near-eye displays for both virtual and augmented reality, but they rely on knowing the user’s focal state. One approach to detecting the focal state is to exploit the link between vergence and accommodation: binocular gaze tracking determines the depth of the fixation point, and hence the focal depth. To ensure the virtual image is in focus, the display must be set to a depth that causes no negative perceptual or physiological effects for the viewer, which places error bounds on the calculated fixation point. I analyze the gaze tracker accuracy required to keep the display focus within the viewer’s depth of field, zone of comfort, and zone of clear single binocular vision. My findings indicate that for the median adult using an augmented reality varifocal display, gaze tracking accuracy must be better than 0.541°. In addition, I discuss eye tracking approaches presented in the literature to determine whether they can meet the specified requirements.

[Paper] [DOI] [BibTeX] [Slides]
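The geometry behind a gaze-accuracy requirement of this kind can be sketched in a few lines. This is a minimal illustration, not the paper's analysis: it assumes symmetric fixation straight ahead, a hypothetical 63 mm interpupillary distance (IPD), and an assumed error direction in which both eyes' gaze estimates err outward by the same angle. All function names are hypothetical. Because fixation distance in diopters equals 2·tan(θ/2)/IPD, which is roughly θ/IPD for small vergence angles θ, a fixed angular error maps to a nearly constant diopter error across distances.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Total vergence angle for a fixation point straight ahead at distance_m."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def distance_from_vergence(ipd_m: float, vergence_deg: float) -> float:
    """Invert the symmetric-fixation geometry; non-positive vergence means 'at infinity'."""
    if vergence_deg <= 0.0:
        return math.inf
    return (ipd_m / 2.0) / math.tan(math.radians(vergence_deg) / 2.0)


def to_diopters(distance_m: float) -> float:
    """Convert a distance in meters to diopters (0 D at optical infinity)."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def focal_error_diopters(ipd_m: float, distance_m: float, gaze_error_deg: float) -> float:
    """Focus error (D) if each eye's gaze estimate errs outward by gaze_error_deg,
    lowering the estimated vergence by 2 * gaze_error_deg (assumed error direction)."""
    true_vergence = vergence_angle_deg(ipd_m, distance_m)
    estimated_distance = distance_from_vergence(ipd_m, true_vergence - 2.0 * gaze_error_deg)
    return abs(to_diopters(distance_m) - to_diopters(estimated_distance))


if __name__ == "__main__":
    IPD_M = 0.063  # hypothetical interpupillary distance
    for d in (0.25, 0.5, 1.0, 2.0):
        err = focal_error_diopters(IPD_M, d, 0.5)
        print(f"{d} m: {err:.3f} D focus error for 0.5 deg per eye")
```

For example, `focal_error_diopters(0.063, 1.0, 0.5)` returns about 0.28 D. Comparing such a value against a depth-of-field or comfort-zone tolerance in diopters is one way to turn a focus tolerance into an angular accuracy bound.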


Mitigating Vergence-Accommodation Conflict for Near-Eye Displays via Deformable Beamsplitters. David Dunn, Praneeth Chakravarthula, Qian Dong, and Henry Fuchs. Digital Optics for Immersive Displays Conference, Photonics Europe. Strasbourg, France. 2018.


Winner: First Prize, DOID Student Optical Design Challenge

Abstract: Deformable beamsplitters have been shown to be a means of creating a wide field of view, varifocal, optical see-through, augmented reality display. Current systems suffer from degraded optical quality at far focus and are tethered to large air compressors or pneumatic devices, which prevents small, self-contained systems. We present an analysis of the shape of the curved beamsplitter as it deforms to different focal depths. Our design also demonstrates a step forward in reducing the form factor of the overall system.

[Paper] [DOI] [BibTeX] [Slides] [Video 1] [Video 2]
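A first-order way to relate beamsplitter deflection to focal power is to treat the deformed membrane as a spherical cap clamped at its rim. This sketch assumes that idealization, whereas the paper analyzes the actual deformed shape rather than assuming one. It uses the sagitta relation R = (a² + s²) / (2s) for aperture radius a and apex deflection s, and the paraxial concave-mirror power P = 2/R. Function names and the 20 mm aperture radius in the usage note are hypothetical.

```python
import math


def cap_radius_of_curvature(aperture_radius_m: float, sag_m: float) -> float:
    """Radius of the sphere passing through the clamped rim and the deflected apex."""
    if sag_m <= 0.0:
        return math.inf  # undeflected (flat) membrane
    return (aperture_radius_m ** 2 + sag_m ** 2) / (2.0 * sag_m)


def mirror_power_diopters(aperture_radius_m: float, sag_m: float) -> float:
    """Paraxial power of the membrane as a concave spherical mirror: P = 2 / R."""
    radius = cap_radius_of_curvature(aperture_radius_m, sag_m)
    return 0.0 if math.isinf(radius) else 2.0 / radius


def sag_for_power(aperture_radius_m: float, power_d: float) -> float:
    """Apex deflection needed for a target power under the spherical-cap assumption."""
    if power_d <= 0.0:
        return 0.0
    radius = 2.0 / power_d
    if radius < aperture_radius_m:
        raise ValueError("power too high for this aperture under the cap model")
    return radius - math.sqrt(radius ** 2 - aperture_radius_m ** 2)
```

Under these assumptions, `sag_for_power(0.02, 10.0)` gives roughly 1.0 mm, so a 10 D mirror over a 20 mm aperture radius needs only about a millimeter of apex deflection. Measured membrane profiles can deviate from a sphere, which is exactly what a shape analysis like the one above would capture.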


Towards Varifocal Augmented Reality Displays using Deformable Beamsplitter Membranes. David Dunn, Praneeth Chakravarthula, Qian Dong, Kaan Akşit, and Henry Fuchs. SID Display Week. Los Angeles, CA. May 2018.


Abstract: Growing evidence in recent literature suggests that gaze-contingent varifocal near-eye displays (NEDs) mitigate visual discomfort caused by the vergence-accommodation conflict (VAC). Such displays promise improved task performance in virtual reality (VR) and augmented reality (AR) applications and demand less compute and power than light field and holographic display alternatives. In this paper, we further extend the evaluation of our gaze-contingent, wide field of view varifocal AR NED layout by evaluating its optical characteristics: resolution, brightness, and eye-box. Our most recent prototype dramatically reduces the form factor and brings the maximum depth switching time under 200 ms.

[Paper] [DOI] [BibTeX]


Membrane AR: Varifocal, Wide Field Of View Augmented Reality Display from Deformable Membranes. David Dunn, Cary Tippets, Kent Torell, Petr Kellnhofer, Kaan Akşit, Piotr Didyk, Karol Myszkowski, David Luebke, and Henry Fuchs. SIGGRAPH Emerging Technologies. Los Angeles, CA. August 2017.


Winner: Digital Content Expo of Japan Special Prize

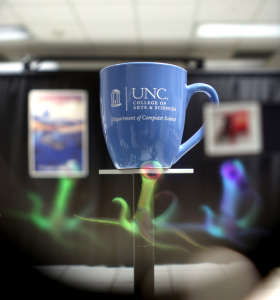

Abstract: Accommodative depth cues, a wide field of view, and ever-higher resolutions present major design challenges for near-eye displays. Optimizing a design to overcome one of them typically leads to a trade-off in the others. We tackle this problem by introducing an all-in-one solution: a novel display for augmented reality. The key components of our solution are two see-through, varifocal deformable membrane mirrors reflecting a display. The mirrors are controlled by airtight cavities and change their effective focal power to present a virtual image at a target depth plane. The benefits of the membranes include a wide field of view and fast depth switching.

[Paper] [DOI] [BibTeX]


Wide Field Of View Varifocal Near-Eye Display Using See-Through Deformable Membrane Mirrors. David Dunn, Cary Tippets, Kent Torell, Petr Kellnhofer, Kaan Akşit, Piotr Didyk, Karol Myszkowski, David Luebke, and Henry Fuchs. IEEE Transactions on Visualization and Computer Graphics, April 2017 (Selected Proceedings, IEEE Virtual Reality 2017, Los Angeles, CA).


Winner: Best Paper Award

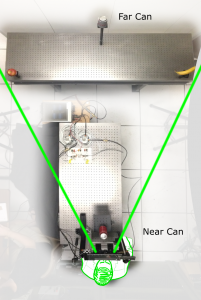

Abstract: Accommodative depth cues, a wide field of view, and ever-higher resolutions all present major hardware design challenges for near-eye displays. Optimizing a design to overcome one of these challenges typically leads to a trade-off in the others. We tackle this problem by introducing an all-in-one solution: a new wide field of view, gaze-tracked near-eye display for augmented reality applications. The key component of our solution is a single see-through varifocal deformable membrane mirror for each eye, reflecting a display. The mirrors are controlled by airtight cavities and change their effective focal power to present a virtual image at a target depth plane determined by the gaze tracker. The benefits of using the membranes include a wide field of view (100° diagonal) and fast depth switching (from 20 cm to infinity within 300 ms). Our subjective experiment verifies the prototype and demonstrates its potential benefits for near-eye see-through displays.

[Paper] [DOI] [BibTeX] [Slides] [Video]
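The link between a membrane mirror's focal power and the depth of the virtual image can be illustrated with the thin-mirror equation. This is a paraxial sketch under stated assumptions, not the prototypes' optical model: it treats each membrane as a thin concave mirror and uses a real-is-positive convention, 1/d_o + 1/d_i = P, with d_i negative for a virtual image behind the mirror. The function name, the 5 cm display distance, and the 3 cm eye relief are hypothetical.

```python
import math


def required_mirror_power(display_dist_m: float, target_from_eye_m: float,
                          eye_relief_m: float) -> float:
    """Paraxial power (diopters) a thin concave mirror needs so that a display
    display_dist_m in front of it appears as a virtual image target_from_eye_m
    from the eye. Convention: 1/d_o + 1/d_i = P, with d_i < 0 for a virtual image."""
    # The eye sits eye_relief_m in front of the mirror; the virtual image lies behind it.
    image_from_mirror_m = target_from_eye_m - eye_relief_m
    if image_from_mirror_m <= 0.0:
        raise ValueError("target must lie beyond the mirror")
    # d_i = -image_from_mirror_m, so 1/d_i = -1/image_from_mirror_m (0 at infinity).
    inv_image = 0.0 if math.isinf(image_from_mirror_m) else 1.0 / image_from_mirror_m
    return 1.0 / display_dist_m - inv_image


if __name__ == "__main__":
    # Hypothetical geometry: display 5 cm from the membrane, eye 3 cm behind it.
    for target in (0.20, 0.50, 1.00, math.inf):
        power = required_mirror_power(0.05, target, 0.03)
        print(f"target {target} m -> mirror power {power:.2f} D")
```

With these hypothetical numbers, spanning 20 cm to infinity means sweeping the mirror power from about 14.1 D to 20 D, a range of roughly 6 D; at 20 D the display sits at the focal point and the image is at infinity.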